This will be a quick overview of my current homelab after some of the recent consolidation and upgrades.

To start off, I have a custom (handmade) server rack that holds all of the equipment. I use trays to hold auxiliary equipment such as keyboards, spare disks for quick replacement and other hardware. The plan is to move my desktop to one of these shelves on my next upgrade.

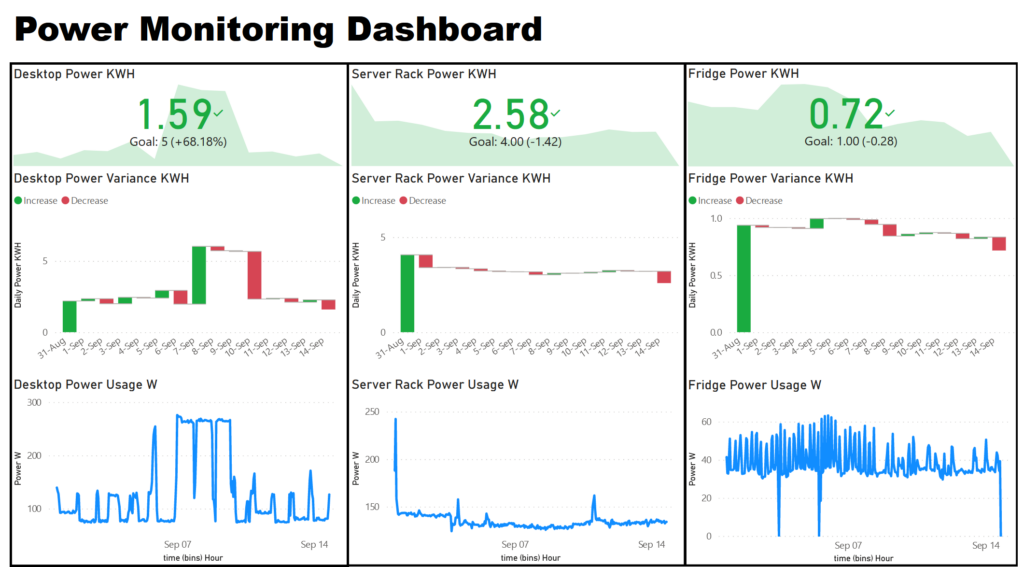

I have a [1500VA APC UPS (BX1500M)](https://www.apc.com/us/en/product/BX1500M/apc-back-ups-1500-compact-tower-1500va-120v-avr-lcd-10-nema-outlets-5-surge/) that meets my needs and covers any power disruptions. An RJ45 data cable connects it to my server, which automatically emails me on any outage and initiates a shutdown if needed.
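The notify-or-shutdown decision can be sketched roughly as below. This is only an illustration, not my actual setup: it assumes the UPS is monitored with apcupsd and that the status text is in the `KEY : value` format printed by `apcaccess status`. The function names and the thresholds (30% charge, 5 minutes of runtime) are made up for the example, and sending the email or running the shutdown is left out.

```python
# Sketch: decide what to do from apcupsd-style status text.
# Assumption: input looks like `apcaccess status` output ("KEY : value" lines).
# The thresholds below are hypothetical, not values from my real config.

def parse_status(text: str) -> dict[str, str]:
    """Turn 'KEY : value' lines into a dict, skipping lines without a colon."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def leading_number(value: str, default: float = 0.0) -> float:
    """Read the first token as a float, e.g. '24.0 Percent' -> 24.0."""
    parts = value.split()
    try:
        return float(parts[0]) if parts else default
    except ValueError:
        return default


def decide_action(fields: dict[str, str],
                  min_charge: float = 30.0,
                  min_minutes: float = 5.0) -> str:
    """Return 'ok' on mains power, 'notify' on battery, 'shutdown' when low."""
    if "ONBATT" not in fields.get("STATUS", ""):
        return "ok"
    charge = leading_number(fields.get("BCHARGE", ""))
    minutes = leading_number(fields.get("TIMELEFT", ""))
    if charge < min_charge or minutes < min_minutes:
        return "shutdown"
    return "notify"


SAMPLE = """\
STATUS   : ONBATT
BCHARGE  : 24.0 Percent
TIMELEFT : 4.5 Minutes
"""

if __name__ == "__main__":
    print(decide_action(parse_status(SAMPLE)))
```

In a real setup the text would come from the monitoring daemon rather than a hard-coded sample, and the daemon's own event hooks would usually handle the email and shutdown.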

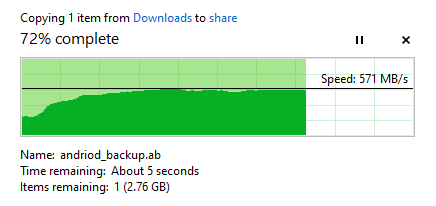

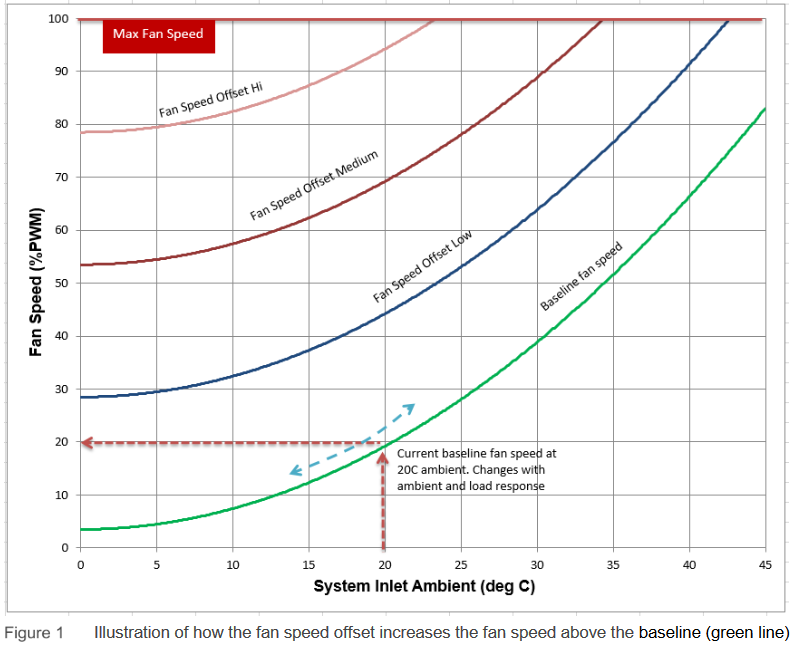

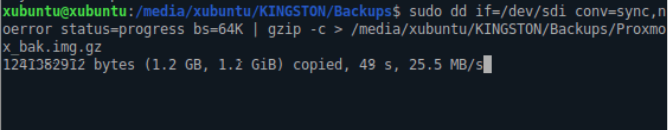

For my main workhorse I have a Dell R530 server with eight 3.5-inch bays and a 10G NIC. This handles the bulk of my file storage, database and processing needs. I run Proxmox as my host and various Debian-based distributions on my VMs.

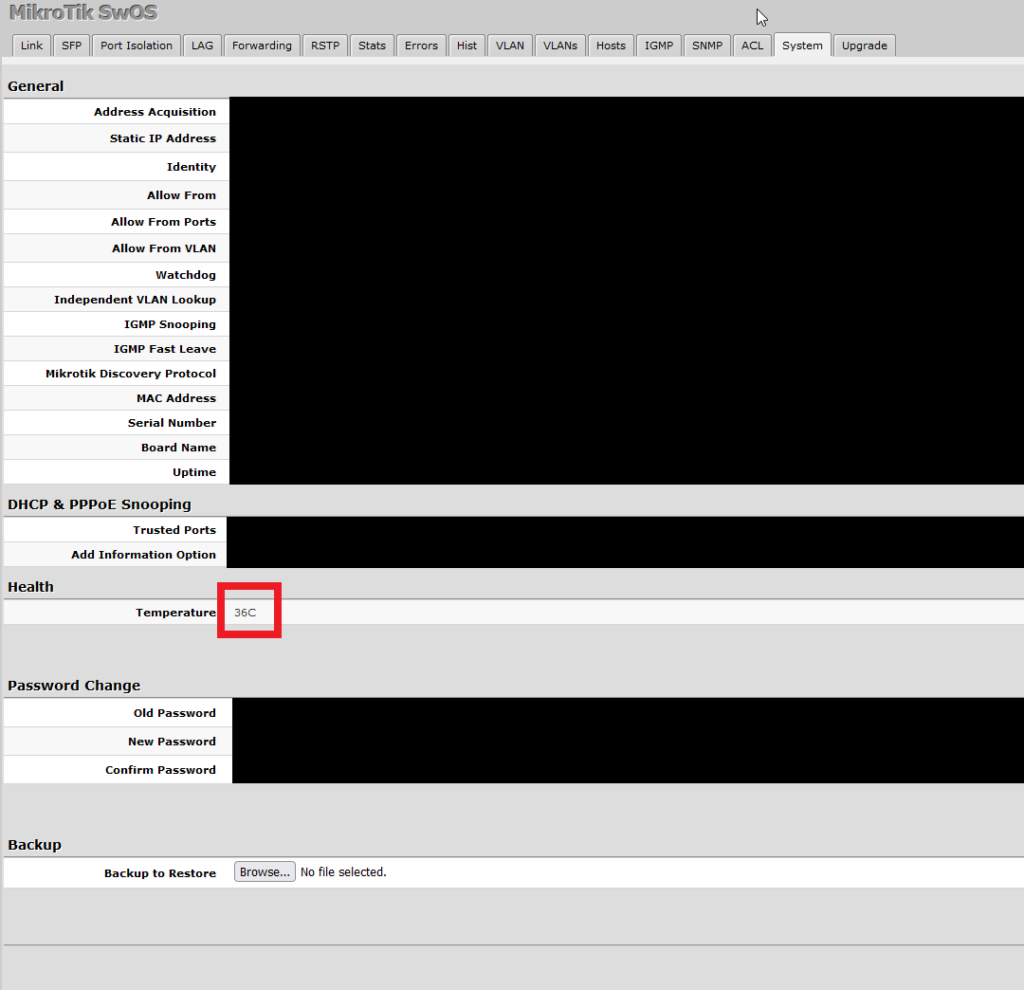

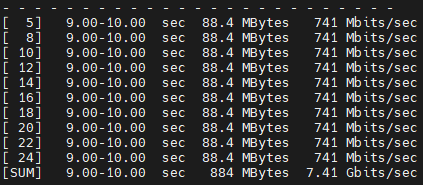

Lastly, for my switch I have a MikroTik CSS326-24G-2S+, which has two SFP+ ports that can handle 10Gbps; I use these for my server and workstation. The server connects over a Cat 6 patch cable and the workstation over a fibre optic cable, as Cat 6 can’t handle the distance. The remaining 24 1Gbps ports are used for various devices.
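To put the 10Gbps links in perspective, here is some back-of-the-envelope arithmetic. It is a rough sketch that assumes the link is the only bottleneck and ignores protocol overhead and disk speed, so real transfers will be slower. The function name and the 100 GB example size are just for illustration.

```python
# Sketch: ideal transfer time over a link, ignoring overhead and disk limits.
# Assumption: sizes are decimal gigabytes (1 GB = 8 gigabits).

def transfer_seconds(size_gb: float, link_gbps: float) -> float:
    """Seconds to move size_gb gigabytes over a link_gbps link at line rate."""
    return size_gb * 8 / link_gbps


if __name__ == "__main__":
    for gbps in (1, 10):
        print(f"100 GB over {gbps} Gbps: {transfer_seconds(100, gbps):.0f} s")
```

At line rate a 100 GB copy would take about 800 seconds on a 1Gbps port versus about 80 seconds on an SFP+ port, which is why the server and workstation get the two fast ports.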